Fueling the Next Wave of AI Agents: Building the Foundation for Future MCP Clients and Enterprise Data Access

How Wren Engine Powers the Semantic Layer for AI-Driven Workflows — Unlocking Context-Aware Intelligence Across the MCP Ecosystem

Howard Chi

Updated: Dec 18, 2025

Published: Mar 31, 2025

In the fast-moving world of artificial intelligence, new standards and technologies emerge frequently. Yet few have sparked as much excitement and grassroots innovation as the Model Context Protocol (MCP). Designed as an open standard for connecting Large Language Models (LLMs) with tools, databases, and other systems, MCP is becoming a foundational pillar in the age of AI agents. Although there are many impressive MCP demos and innovative applications emerging every day, we rarely see enterprises adopting these tools as part of their daily workflows.

For MCP to reach its full potential — especially in the enterprise — it must go beyond web automation or local file interactions. Without the right data, MCP remains very limited for enterprise use cases. Once we solve this critical challenge, we believe we’ll start seeing explosive new growth in AI adoption. At Wren AI, we see enabling AI to query the right data with full business context as essential — and that’s precisely where the Wren Engine comes in.

The Rise of MCP: Innovation Across the Community

The Model Context Protocol is a standardized, open framework that allows AI models to communicate with external services in a consistent, secure, and extensible way. It defines a common language for passing context, making it possible for different tools and services to interoperate with AI agents without reinventing the wheel each time.

In just a few months, thousands of MCP servers have been developed and shared within the community. These servers let AI systems interact with local applications, web services, cloud storage, and APIs through natural language prompts. Plenty of material online showcases MCP demos and implementations.

As of March 2025, about 4,405 MCP servers were listed on https://mcp.so/

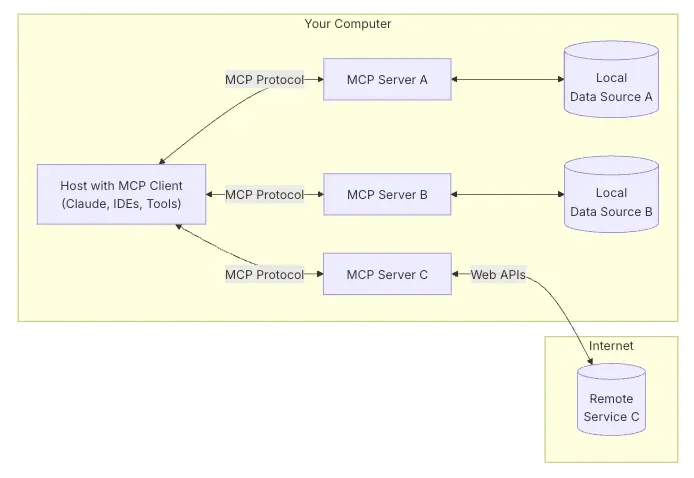

MCP is built on a simple but powerful client-server model. An MCP client is typically the AI assistant or LLM interface that initiates a request — such as asking a question, generating content, or executing a task. The MCP server is the responder that executes that request by connecting to a specific tool or data source and returning the structured result.
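To make the request and response shape concrete, here is a minimal sketch in Python. MCP messages are JSON-RPC 2.0, and `tools/call` is the method a client uses to invoke a server tool. Everything else is an assumption for illustration: the tool name `run_query`, its `sql` argument, and the canned result are hypothetical, and no real transport or SDK is involved.

```python
import json


def run_query(sql: str) -> str:
    """Hypothetical tool: a real server would execute the SQL against a database."""
    return json.dumps([{"region": "EMEA", "revenue": 1200}])


# Hypothetical server-side tool registry.
TOOLS = {"run_query": run_query}


def build_call(request_id: int, name: str, arguments: dict) -> str:
    """Client side: build a JSON-RPC 2.0 `tools/call` request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle_request(raw: str) -> str:
    """Server side: dispatch the request and wrap the result as text content."""
    req = json.loads(raw)
    base = {"jsonrpc": "2.0", "id": req.get("id")}
    if req.get("method") != "tools/call":
        # -32601 is the JSON-RPC code for "method not found".
        return json.dumps({**base, "error": {"code": -32601, "message": "Method not found"}})
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # -32602 is the JSON-RPC code for "invalid params".
        return json.dumps({**base, "error": {"code": -32602, "message": "Unknown tool"}})
    text = tool(**params.get("arguments", {}))
    return json.dumps({**base, "result": {"content": [{"type": "text", "text": text}]}})


request = build_call(1, "run_query", {"sql": "SELECT 1"})
response = json.loads(handle_request(request))
rows = json.loads(response["result"]["content"][0]["text"])
```

The client only needs to know the tool name and its arguments; how the server reaches the data source stays hidden behind the protocol.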

Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect devices to a wide range of peripherals and accessories, MCP offers a standardized way to connect AI models to various tools, databases, and external services. This standardization allows modular and scalable AI workflows across different environments and technology stacks.

General architecture of MCP (source: the official MCP site)

A Glimpse into Today’s MCP Use Cases

- AI Personal Assistants: Imagine querying your calendar, browsing local files, or updating notes — all via natural language. MCP projects have made this seamless.

- Code Repository Management: Developers have built MCP integrations with GitHub, enabling AI to browse repositories, comment on code, and suggest fixes autonomously.

- Web Automation Agents: Agents powered by MCP can now automate repetitive web tasks like checking prices, booking meetings, or scraping reports.

These are impressive demonstrations of what’s possible with AI agents. But most of these solutions are still centered around individual workflows — running on local machines, handling personal data, or automating tasks in isolated environments.

The Enterprise Gap: When Data Matters More

At the enterprise level, the stakes — and the complexity — are much higher. Businesses run on structured data stored in cloud warehouses, relational databases, and secure filesystems. From BI dashboards to CRM updates and compliance workflows, AI must not only execute commands but also understand and retrieve the right data, with precision and in context.

While many community and official MCP servers already support connections to major databases like PostgreSQL, MySQL, SQL Server, and more, there’s a problem: raw access to data isn’t enough.

Enterprises need:

- Accurate semantic understanding of their data models

- Trusted calculations and aggregations in reporting

- Clarity on business terms, like “active customer,” “net revenue,” or “churn rate”

- User-based permissions and access control

Natural language alone isn’t enough to drive complex workflows across enterprise data systems. You need a layer that interprets intent, maps it to the correct data, applies calculations accurately, and ensures security.

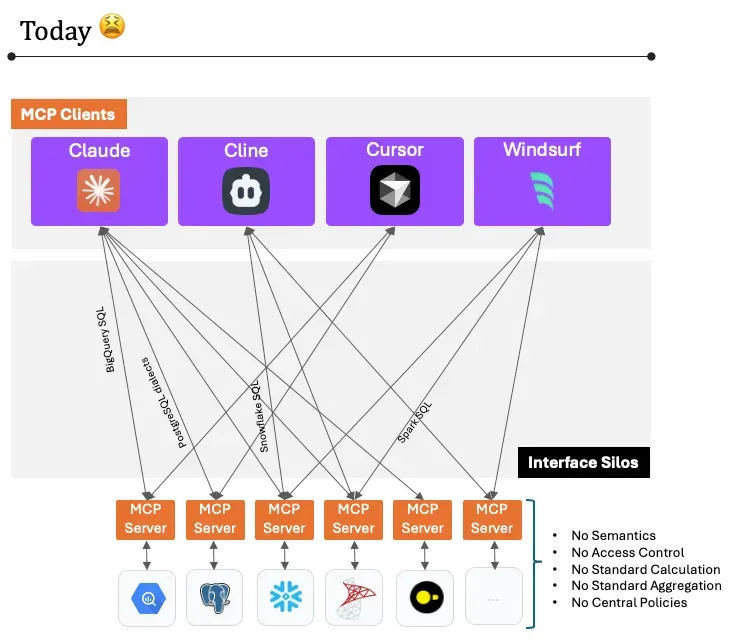

Today’s MCP servers connect to databases.

This is where most MCP database integrations today fall short.

Why Semantic Layers Are the Missing Link

The current generation of AI assistants excels at language — but falters when it comes to understanding your business. Without a semantic layer that defines how data is connected, what metrics mean, and how to perform consistent aggregations, AI is left guessing.

For example, when a user asks, “What’s our revenue in Q4 for new customers in EMEA?”, the system must:

- Know what “revenue” means in the business context

- Apply the correct time filters

- Join customer and sales data appropriately

- Recognize “new customers” as a derived attribute

Doing this accurately and consistently across teams is impossible without a semantic foundation. That’s why we built the Wren Engine.
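The four steps above can be sketched as a lookup against shared definitions. This is a toy illustration, not Wren Engine's API: the table and column names, the definition of "new customers" (first order placed within the period), and the assumption that Q4 means calendar Q4 are all hypothetical.

```python
# Hypothetical shared definitions; every name here is illustrative.
METRICS = {"revenue": "SUM(o.amount - o.refunded_amount)"}
SEGMENTS = {"new customers": "c.first_order_date >= :start"}
JOINS = {("orders", "customers"): "o.customer_id = c.id"}


def quarter_range(year: int, quarter: int):
    """Return (start, end) ISO dates for a calendar quarter, end exclusive."""
    start_month = 3 * (quarter - 1) + 1
    start = f"{year}-{start_month:02d}-01"
    if quarter == 4:
        end = f"{year + 1}-01-01"
    else:
        end = f"{year}-{start_month + 3:02d}-01"
    return start, end


def build_sql(metric: str, segment: str, region: str, year: int, quarter: int):
    """Compose a parameterized query from the shared definitions."""
    start, end = quarter_range(year, quarter)
    sql = (
        f"SELECT {METRICS[metric]} AS {metric}\n"            # what "revenue" means
        "FROM orders o\n"
        f"JOIN customers c ON {JOINS[('orders', 'customers')]}\n"  # the join
        "WHERE o.order_date >= :start AND o.order_date < :end\n"   # time filter
        "  AND c.region = :region\n"
        f"  AND {SEGMENTS[segment]}"                         # derived attribute
    )
    return sql, {"start": start, "end": end, "region": region}


sql, params = build_sql("revenue", "new customers", "EMEA", 2024, 4)
```

Because every team resolves "revenue" and "new customers" through the same dictionaries, two people asking the same question get the same query.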

Introducing Wren Engine: The Semantic Engine Powering the Semantic Layer for Future MCP Clients and AI Agents

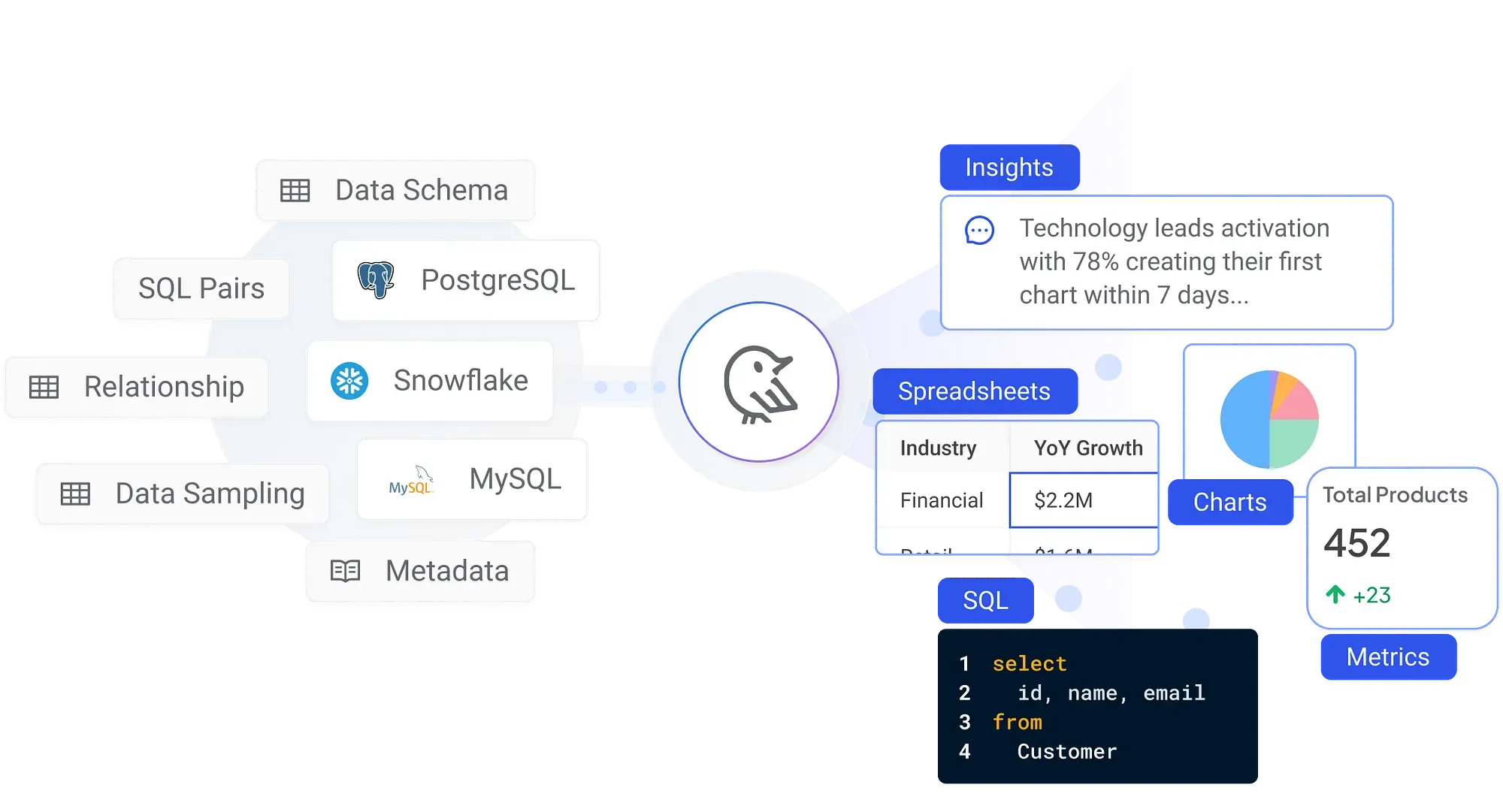

The Wren Engine is an open-source semantic engine that powers the semantic layer for AI-driven data access. It is designed to let hundreds, and potentially thousands, of future MCP clients retrieve the right data from their databases accurately. By building the semantic layer directly into MCP clients such as Claude, Cline, and Cursor, Wren Engine gives AI agents precise business context and ensures accurate data interactions across diverse enterprise environments.

The Future of the MCP Ecosystem with Wren Engine

Key Capabilities of the Wren Engine

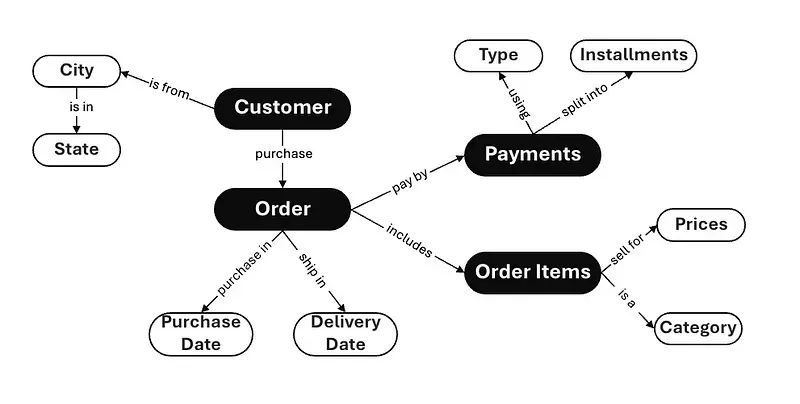

1. Semantic Modeling

Wren Engine enables you to define your business logic, relationships, metrics, and KPIs in a structured, graph-based format. This semantic model maps your natural language requests to the correct data with precision.
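As a rough illustration of what "graph-based" can mean here, the sketch below treats models as nodes and relationships as edges, then finds a join path with a breadth-first search. The class names, fields, and sample tables are hypothetical and do not reflect Wren Engine's actual modeling format.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Relationship:
    left: str
    right: str
    condition: str  # join condition linking the two models


@dataclass
class SemanticGraph:
    models: set = field(default_factory=set)
    relationships: list = field(default_factory=list)

    def add_relationship(self, left: str, right: str, condition: str) -> None:
        self.models.update({left, right})
        self.relationships.append(Relationship(left, right, condition))

    def join_path(self, start: str, goal: str):
        """Breadth-first search; returns the join conditions from start to goal,
        or None when the two models are not connected."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, path = queue.popleft()
            if node == goal:
                return path
            for rel in self.relationships:
                if rel.left == node:
                    nxt = rel.right
                elif rel.right == node:
                    nxt = rel.left
                else:
                    continue
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [rel.condition]))
        return None


graph = SemanticGraph()
graph.add_relationship("orders", "customers", "orders.customer_id = customers.id")
graph.add_relationship("customers", "regions", "customers.region_id = regions.id")
path = graph.join_path("orders", "regions")
```

With the relationships declared once, a question that spans orders and regions no longer depends on the LLM guessing the intermediate join through customers.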

2. Real-Time SQL Rewrite

Using metadata and user context, Wren Engine rewrites AI-generated SQL in real time — applying necessary joins, filters, calculations, and access controls.
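One simplified way to picture the rewrite step: the AI writes SQL against a semantic model name, and the rewriter prepends the model's definition as a common table expression. The model `customer_revenue` and its SQL are hypothetical, and the regex match is a stand-in; a production engine would parse and plan the query rather than pattern-match on text.

```python
import re

# Hypothetical semantic model definitions: model name -> underlying SQL.
MODEL_SQL = {
    "customer_revenue": (
        "SELECT c.region, o.amount - o.refunded_amount AS net_revenue\n"
        "  FROM orders o JOIN customers c ON o.customer_id = c.id"
    ),
}


def rewrite(sql: str) -> str:
    """Prepend a CTE for each semantic model the query references."""
    used = [
        name for name in MODEL_SQL
        if re.search(rf"\b{re.escape(name)}\b", sql)
    ]
    if not used:
        return sql  # nothing to expand
    ctes = ",\n".join(f"{name} AS (\n  {MODEL_SQL[name]}\n)" for name in used)
    return f"WITH {ctes}\n{sql}"


ai_sql = "SELECT region, SUM(net_revenue) FROM customer_revenue GROUP BY region"
rewritten = rewrite(ai_sql)
```

The AI-generated query stays short and business-level, while joins and calculations come from the model definition rather than from the prompt.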

3. Business Context Awareness

Rather than relying on fragile prompt engineering, Wren Engine gives AI agents a robust layer of understanding about your business: what “net revenue” means, how a “customer cohort” is defined, and which segments apply to which teams.

4. Native Support for Major Databases

Whether you use PostgreSQL, MySQL, Snowflake, or Microsoft SQL Server, Wren Engine integrates easily and maintains high performance.

5. Secure Role-Based Access

It handles authentication and data visibility based on user roles, so queries respect enterprise-level governance requirements.
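A minimal sketch of role-based row filtering, assuming hypothetical role names and policies: the query is wrapped in a subquery and the role's predicate is applied on the outside. This is illustrative only and says nothing about how Wren Engine implements access control.

```python
# Hypothetical row-level policies: role -> predicate (None means unrestricted).
ROW_POLICIES = {
    "emea_analyst": "region = 'EMEA'",
    "admin": None,
}


def apply_row_policy(sql: str, role: str) -> str:
    """Wrap the query so the role's predicate filters its result rows.

    Assumes the inner query exposes every column the predicate refers to.
    """
    if role not in ROW_POLICIES:
        raise PermissionError(f"unknown role: {role}")
    predicate = ROW_POLICIES[role]
    if predicate is None:
        return sql
    return f"SELECT * FROM (\n{sql}\n) AS scoped WHERE {predicate}"


base_sql = "SELECT region, net_revenue FROM customer_revenue"
scoped_sql = apply_row_policy(base_sql, "emea_analyst")
```

Unknown roles fail closed with an error instead of falling back to unrestricted access.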

Driving Enterprise Adoption: From LLM Playground to AI-Powered BI

As AI adoption accelerates in the enterprise, teams are experimenting with LLMs in all kinds of workflows — from sales support to operations. However, many of these experiments stall when AI can’t answer basic questions like:

- “What was our MRR last month, by region?”

- “Which customers have churned in the last 60 days?”

- “Show me marketing leads that converted to deals this quarter.”

It’s not that AI can’t generate SQL. It’s that without a semantic layer, it doesn’t know what to query. And that limits its ability to create value.

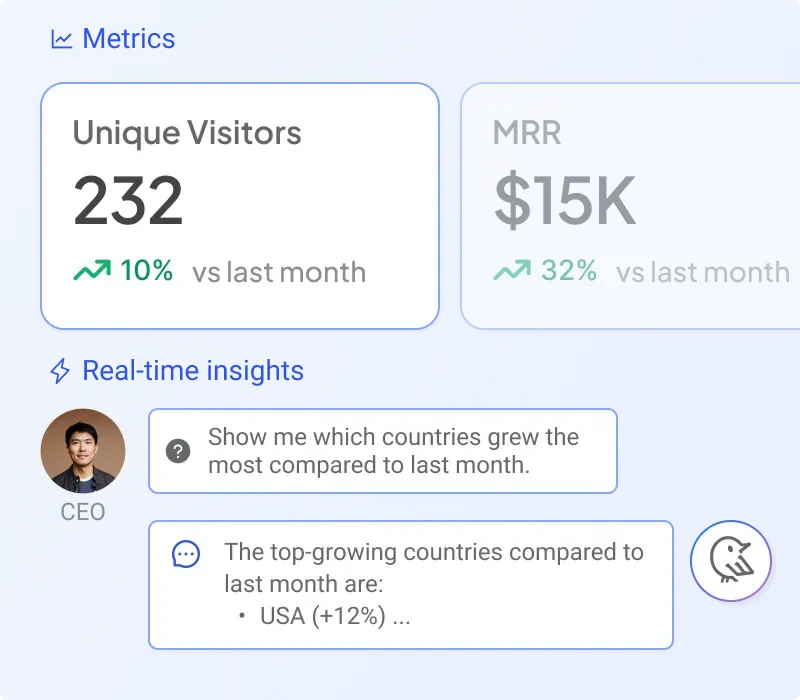

By deploying Wren Engine-powered MCP servers, enterprises can:

- Empower teams to ask business questions in natural language

- Generate trusted, explainable insights instantly

- Maintain consistency across data definitions and dashboards

- Scale AI usage securely across departments

This is how we move from individual proof-of-concepts to enterprise-grade productivity.

Endless Possibilities and Imagination with Wren Engine and MCP Servers

To better understand the impact, let’s look at how Wren Engine unlocks value by connecting with various powerful MCP clients in real-world enterprise workflows:

Perplexity + Wren Engine: Smart Knowledge Retrieval

Task: An analyst wants a reliable answer backed by company data and external sources.

With Wren Engine: Perplexity MCP retrieves answers from both the internal knowledge base and the connected database via Wren Engine, so the insight is accurate and grounded in company context.

HubSpot + Wren Engine: Intelligent CRM Updates

Task: A marketing ops manager wants to find and update leads in the CRM.

With Wren Engine: Ask “Update lifecycle stage for leads from last campaign with no engagement” — HubSpot MCP pulls CRM records and Wren Engine powers the logic behind filters and updates.

Zapier + Wren Engine: Workflow Automation

Task: Automate a report summary each time a sales deal closes.

With Wren Engine: Zapier MCP triggers the pipeline, and Wren Engine queries the semantic data model to generate deal summaries based on contextual metrics.

Google Docs + Wren Engine: AI-Powered Document Search

Task: A legal team member needs to find contracts expiring this quarter.

With Wren Engine: Google Docs MCP accesses document storage and Wren Engine identifies relevant files based on semantic understanding of metadata and content.

What’s Next: Building the Semantic Future of AI

We’re entering a new era of generative AI, where language is the interface — but data is the fuel. For AI to fulfill its promise in the enterprise, it must speak the language of business data fluently, securely, and accurately.

The Interface for MCP Clients to Any Database

At Wren AI, we believe the most powerful AI systems won’t just generate words — they’ll generate truth. That means making sure every chart, metric, and insight is rooted in semantic accuracy.

By combining MCP’s extensibility with the semantic intelligence of Wren Engine, we’re creating a new standard for how businesses interact with data using AI.

MCP is one of the most exciting developments in the open AI ecosystem today. It empowers the community to rapidly build integrations that make LLMs more useful, capable, and dynamic.

But for enterprise adoption to truly take off, we must solve the hard problem of data context — ensuring that AI doesn’t just say things confidently, but says things correctly.

That’s the mission of Wren Engine.

If you’re building MCP-powered agents or exploring how generative BI can transform your company, let’s talk. Your data already holds the answers — Wren Engine just helps your AI find them.

Wren Engine is Fully Open Source!

Explore Wren Engine’s capabilities firsthand by visiting our GitHub repository. The project is licensed under Apache 2.0.

Try it yourself by connecting your MCP clients to Wren Engine and witness the magic of semantic data access. The Wren Engine MCP server is still in its early phase, and we are actively improving it. We warmly invite you to join our community and help accelerate the future of MCP.
